SMC samplers¶

This tutorial gives a basic introduction to SMC samplers, and explains how to run the SMC samplers already implemented in particles. For a more advanced tutorial on how to design new SMC samplers, see the next tutorial. For more background on SMC samplers, check either Chapter 17 of the book or Dau & Chopin (2022) for waste-free SMC. Arxiv version is here.

SMC samplers: what for?¶

A SMC sampler is a SMC algorithm that samples from a sequence of probability distributions \(\pi_t\), \(t=0,\ldots,T\) (and compute their normalising constants). Sometimes one is genuinely interested in each \(\pi_t\); more often one is interested only in the final distribution \(\pi_T\). In the latter case, the sequence is purely instrumental.

Examples of SMC sequences are:

\(\pi_t(\theta) = p(\theta|y_{0:t})\), the Bayesian posterior distribution of parameter \(\theta\) given data \(y_{0:t}\), for a certain model.

A tempering sequence, \(\pi_t(\theta) \propto \nu(\theta) L(\theta)^{\gamma_t}\) ,where the \(\gamma_t\)’s form an increasing sequence of exponents: \(0=\gamma_0 < \ldots < \gamma_T=1\). You can think of \(\nu\) being the prior, \(L\) the likelihood function, and \(\pi_T\) the posterior. However, more generally, tempering is a way to interpolate between any two distributions, \(\nu\) and \(\pi\), with \(\pi(\theta) \propto \nu(\theta) L(\theta)\).

We discuss first how to specify a sequence of the first type.

Defining a Bayesian model¶

To define a particular Bayesian model, you must subclass StaticModel, and define method logpyt, which evaluates the log-likelihood of datapoint \(Y_t\) given parameter \(\theta\) and past datapoints \(Y_{0:t-1}\). Here is a simple example:

[1]:

%matplotlib inline

from matplotlib import pyplot as plt

import seaborn as sb

import numpy as np

from scipy import stats

import particles

from particles import smc_samplers as ssp

from particles import distributions as dists

class ToyModel(ssp.StaticModel):

def logpyt(self, theta, t): # density of Y_t given theta and Y_{0:t-1}

return stats.norm.logpdf(self.data[t], loc=theta['mu'],

scale = theta['sigma'])

In words, we are considering a model where the observations are \(Y_t\sim N(\mu, \sigma^2)\) (independently). The parameter is \(\theta=(\mu, \sigma)\). Note the fields notation; more about this later.

Class ToyModel implicitly defines the likelihood of the considered model for any sample size (since the likelihood at time \(t\) is \(p^\theta(y_{0:t})=\prod_{s=0}^t p^\theta(y_s|y_{0:s-1})\), and method logpyt defines each factor in this product; note that \(y_s\) does not depend on the past values in our particular example). We now define the data and the prior:

[2]:

T = 30

my_data = stats.norm.rvs(loc=3.14, size=T) # simulated data

my_prior = dists.StructDist({'mu': dists.Normal(scale=10.),

'sigma': dists.Gamma()})

For more details about to define prior distributions, see the documentation of module distributions, or the previous tutorial on Bayesian estimation of state-space models. Now that we have everything, let’s specify our static model:

[3]:

my_static_model = ToyModel(data=my_data, prior=my_prior)

This time, object my_static_model entirely defines the posterior.

[4]:

thetas = my_prior.rvs(size=5)

my_static_model.logpost(thetas, t=2)

# if t is omitted, gives the full posterior

[4]:

array([-2.37897769e+02, -8.61877866e+01, -4.94969347e+02, -2.32511323e+05,

-2.63076061e+02])

The input of logpost and output of myprior.rvs() are structured arrays, that is, arrays with fields:

[5]:

thetas['mu'][0]

[5]:

-4.241375242422195

Typically, you won’t need to call logpost yourself, this will be done by the SMC sampler for you.

IBIS¶

IBIS (iterated batch importance sampling) is the standard name for a SMC sampler that tracks a sequence of partial posterior distributions; i.e. \(\pi_t\) is \(p(\theta|y_{0:t})\), for \(t=0,1,\ldots\).

Module smc_samplers defines IBIS as a subclass of FeynmanKac.

[6]:

my_ibis = ssp.IBIS(my_static_model, len_chain=50)

my_alg = particles.SMC(fk=my_ibis, N=20,

store_history=True, verbose=True)

my_alg.run()

t=0, ESS=30.38

t=1, Metropolis acc. rate (over 49 steps): 0.179, ESS=320.47

t=2, Metropolis acc. rate (over 49 steps): 0.265, ESS=739.71

t=3, ESS=495.11

t=4, Metropolis acc. rate (over 49 steps): 0.239, ESS=699.01

t=5, ESS=362.60

t=6, Metropolis acc. rate (over 49 steps): 0.357, ESS=855.81

t=7, ESS=563.24

t=8, ESS=429.52

t=9, Metropolis acc. rate (over 49 steps): 0.348, ESS=944.20

t=10, ESS=821.65

t=11, ESS=672.69

t=12, ESS=600.56

t=13, ESS=512.21

t=14, ESS=812.56

t=15, ESS=738.51

t=16, ESS=675.90

t=17, ESS=618.24

t=18, ESS=558.24

t=19, ESS=482.73

t=20, Metropolis acc. rate (over 49 steps): 0.349, ESS=972.66

t=21, ESS=925.96

t=22, ESS=889.08

t=23, ESS=970.02

t=24, ESS=950.08

t=25, ESS=732.06

t=26, ESS=714.42

t=27, ESS=453.15

t=28, Metropolis acc. rate (over 49 steps): 0.316, ESS=978.24

t=29, ESS=951.31

Note: we use option verbose=True in SMC in order to print some information on the intermediate distributions.

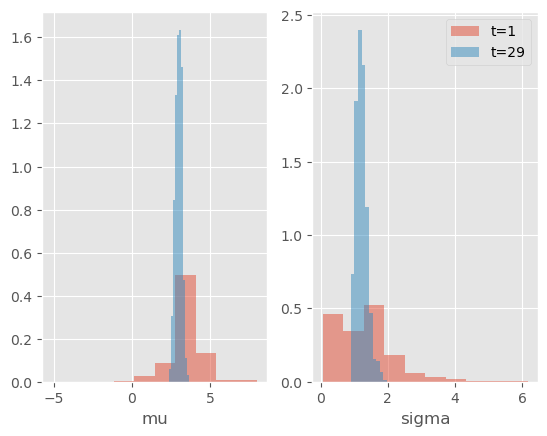

Note: Since we set store_history to True, the particles and their weights have been saved at every time (in attribute hist, see previous tutorials on smoothing). Let’s plot the posterior distributions of \(\mu\) and \(\sigma\) at various times.

[7]:

plt.style.use('ggplot')

for i, p in enumerate(['mu', 'sigma']):

plt.subplot(1, 2, i + 1)

for t in [1, 29]:

plt.hist(my_alg.hist.X[t].theta[p], weights=my_alg.hist.wgts[t].W, label="t=%i" % t,

alpha=0.5, density=True)

plt.xlabel(p)

plt.legend();

As expected, the posterior distribution concentrates progressively around the true values.

As always, once the algorithm is run, my_smc.X contains the final particles. However, object my_smc.X is no longer a simple numpy array. It is a ThetaParticles object, with attributes:

theta: a structured array (an array with fields); i.e.my_smc.X.theta['mu']is a (N,) array that contains the the \(\mu-\)component of the \(N\) particles;lpost: a 1D numpy array that contains the target (posterior) log-density of each of the particles;shared: a dictionary that contains “meta-data” on the particles; for instanceshared['acc_rates']is a list of the acceptance rates of the successive Metropolis steps.

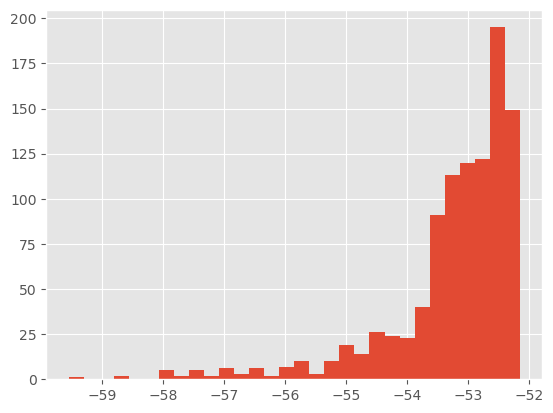

[8]:

print(["%2.f%%" % (100 * np.mean(r)) for r in my_alg.X.shared['acc_rates']])

plt.hist(my_alg.X.lpost, 30);

['18%', '26%', '24%', '36%', '35%', '35%', '32%']

You do not need to know much more about class ThetaParticles in practice (if you’re curious, however, see the next tutorial on SMC samplers or the documentation of module smc_samplers).

Waste-free versus standard SMC samplers¶

The library now implements by default waste-free SMC (Dau & Chopin, 2020), a variant of SMC samplers that keeps all the intermediate Markov steps (rather than “wasting” them). In practice, this means that, in the piece of code above:

at each time \(t\), \(N=20\) particles are resampled, and used as a starting points of the MCMC chains;

the MCMC chains are run for 49 iterations, hence the chain length is 50 (parameter

len_chain=50)and since we keep all the intermediate steps, we get 50*20 = 1000 particles at each iteration. In particular, we do O(1000) operations at each step. (At time 0, we also generate 1000 particles.)

Thus, the number of particles is actually N * len_chain; given this number of particles, the performance typically does not depend too much on N and len_chain, provided the latter is “big enough” (relative to the mixing of the MCMC kernels).

See Dau & Chopin (2020) for more details on waste-free SMC. If you wish to run a standard SMC sampler instead, you may set wastefree=False, like this:

[9]:

my_ibis = ssp.IBIS(my_static_model, wastefree=False, len_chain=11)

my_alg = particles.SMC(fk=my_ibis, N=100, store_history=True)

my_alg.run()

This runs a standard SMC sampler which tracks \(N=100\) particles; these particles are resampled from time to time, and then moved through 10 MCMC steps. (As explained in Dau & Chopin, 2020, you typically get a better performance vs CPU time trade-off with wastefree SMC.)

Single-run variance estimates¶

An advantage of waste-free SMC is that it gives you the possibility to estimate the (asymptotic) variance of a given estimate from a single run. Here is a quick example, but check also the documentation of smc_samplers.var_wf and the collectors smc_samplers.Var_logLt, smc_samplers.Var_phi for more details.

[10]:

phi = lambda x : x.theta['mu'] # scalar function

est = np.average(phi(my_alg.X), weights=my_alg.W)

var_est = ssp.var_wf(my_alg, phi)

print(f'estimate of E(mu]: {est}')

print(f'asymptotic variance of the above estimate: {var_est}')

print(f'95% confidence interval for E[mu] {est} +-{1.96 * np.sqrt(var_est / 1000)}')

estimate of E(mu]: 2.9956255516306944

asymptotic variance of the above estimate: 0.03999515708204

95% confidence interval for E[mu] 2.9956255516306944 +-0.012395377987232371

The confidence interval accounts for the Monte Carlo error (not the statistical error, which you would measure through the posterior variance). Classically, you must divide the asymptotic variance by the number of particles to get an estimate of the actual variance.

Regarding the MCMC steps¶

The default MCMC kernel used to move the particles is a Gaussian random walk Metropolis kernel, whose covariance matrix is calibrated automatically to \(\gamma\) times of the empirical covariance matrix of the particle sample, where \(\gamma=2.38 / \sqrt{d}\) (standard choice in the literature).

It is possible to specify a different value for \(\gamma\), or more generally other types of MCMC moves; for instance the following uses Metropolis kernels based on independent Gaussian proposals:

[11]:

mcmc = ssp.ArrayIndependentMetropolis(scale=1.1)

# Independent Gaussian proposal, with mean and variance determined by

# the particle sample (variance inflated by factor scale=1.1)

alt_move = ssp.MCMCSequenceWF(mcmc=mcmc)

# This object represents a particular way to apply several MCMC steps

# in a row. WF = WasteFree

alt_ibis = ssp.IBIS(my_static_model, move=alt_move)

alt_alg = particles.SMC(fk=alt_ibis, N=100,ESSrmin=0.2,

verbose=True)

alt_alg.run()

t=0, ESS=58.32

t=1, Metropolis acc. rate (over 9 steps): 0.383, ESS=423.19

t=2, ESS=165.63

t=3, Metropolis acc. rate (over 9 steps): 0.526, ESS=626.73

t=4, ESS=705.49

t=5, ESS=493.02

t=6, ESS=368.40

t=7, ESS=243.54

t=8, ESS=196.84

t=9, Metropolis acc. rate (over 9 steps): 0.748, ESS=942.05

t=10, ESS=801.91

t=11, ESS=622.29

t=12, ESS=531.48

t=13, ESS=426.85

t=14, ESS=807.19

t=15, ESS=727.41

t=16, ESS=655.57

t=17, ESS=586.12

t=18, ESS=514.90

t=19, ESS=439.62

t=20, ESS=376.15

t=21, ESS=319.90

t=22, ESS=322.03

t=23, ESS=403.06

t=24, ESS=351.35

t=25, ESS=486.05

t=26, ESS=443.87

t=27, ESS=442.08

t=28, ESS=445.23

t=29, ESS=412.93

In the future, the package may also implement other type of MCMC kernels such as MALA. It is also possible to define your own MCMC kernels, as explained in the next tutorial.

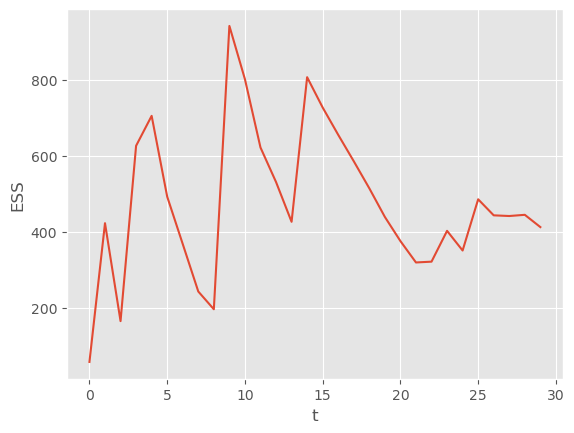

For now, note the following practical detail: the algorithm resamples whenever the ESS gets below a certain threshold \(\alpha * N\); the default value \(\alpha=0.5\), but here we changed it (to \(\alpha=0.2\)) by setting ESSrmin=0.2.

[12]:

plt.plot(alt_alg.summaries.ESSs)

plt.xlabel('t')

plt.ylabel('ESS');

As expected, the algorithm waits until the ESS is below 200 to trigger a resample-move step.

SMC tempering¶

SMC tempering is a SMC sampler that samples iteratively from the following sequence of distributions:

\begin{equation} \pi_t(\theta) \propto \pi(\theta) L(\theta)^\gamma_t \end{equation}

with \(0=\gamma_0 < \ldots < \gamma_T = 1\). In words, this sequence is a geometric bridge, which interpolates between the prior and the posterior.

SMC tempering implemented in the same was as IBIS: as a sub-class of FeynmanKac, whose __init__ function takes as argument a StaticModel object.

[13]:

fk_tempering = ssp.AdaptiveTempering(my_static_model)

my_temp_alg = particles.SMC(fk=fk_tempering, N=1000, ESSrmin=1.,

verbose=True)

my_temp_alg.run()

t=0, ESS=5000.00, tempering exponent=0.000798

t=1, Metropolis acc. rate (over 9 steps): 0.270, ESS=5000.00, tempering exponent=0.0111

t=2, Metropolis acc. rate (over 9 steps): 0.245, ESS=5000.00, tempering exponent=0.062

t=3, Metropolis acc. rate (over 9 steps): 0.253, ESS=5000.00, tempering exponent=0.227

t=4, Metropolis acc. rate (over 9 steps): 0.291, ESS=5000.00, tempering exponent=0.788

t=5, Metropolis acc. rate (over 9 steps): 0.333, ESS=9573.62, tempering exponent=1

Note: Recall that SMC resamples every time the ESS drops below value N times option ESSrmin; here we set it to to 1, since we want to resample at every time. This makes sense: Adaptive SMC chooses adaptively the successive values of \(\gamma_t\) so that the ESS equals a certain value (\(N/2\) by default).

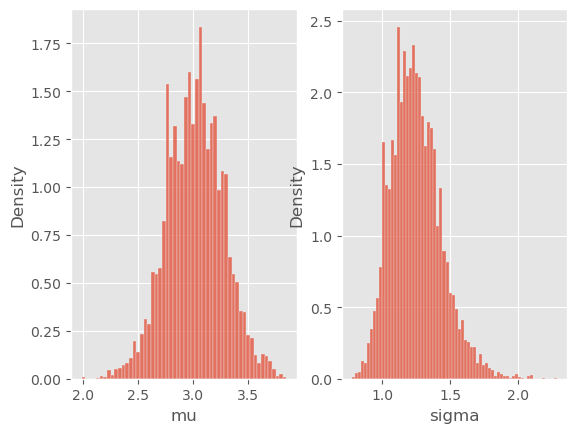

We have not saved the intermediate results this time (option store_history was not set) since they are not particularly interesting. Let’s look at the final results:

[14]:

for i, p in enumerate(['mu', 'sigma']):

plt.subplot(1, 2, i + 1)

sb.histplot(my_temp_alg.X.theta[p], stat='density')

plt.xlabel(p)

This looks reasonable! You can see from the output that the algorithm automatically chooses the tempering exponents \(\gamma_1, \gamma_2,\ldots\). In fact, at iteration \(t\), the next value for \(\gamma\) is set that the ESS drops at most to \(N/2\). You can change this particular threshold by passing argument ESSrmin to TemperingSMC. (Warning: do not mistake this with the ESSrmin argument of class SMC):

[15]:

lazy_tempering = ssp.AdaptiveTempering(my_static_model, ESSrmin = 0.1)

lazy_alg = particles.SMC(fk=lazy_tempering, N=1000, verbose=True)

lazy_alg.run()

t=0, ESS=1000.00, tempering exponent=0.037

t=1, Metropolis acc. rate (over 9 steps): 0.246, ESS=1000.00, tempering exponent=0.79

t=2, Metropolis acc. rate (over 9 steps): 0.335, ESS=9537.70, tempering exponent=1

The algorithm progresses faster this time, but the ESS drops more between each step. Another optional argument for Class TemperingSMC is options_mh, which works exactly as for IBIS, see above. That is, by default, the particles are moved according to a certain (adaptative) number of random walk steps, with a variance calibrated to the particle variance.

Again, all the indications above should be sufficient if you simply want to run a SMC sampler with random-walk Metropolis steps. If you are interested in more exotic SMC samplers (based on different MCMC kernels, or such that the state-space is not \(\mathbb{R}^d\)), see the next tutorial on SMC samplers.